Robustness

Lecture

Mon., Mar. 18

Refresher

Today

Refresher

Robustness motivation

Robustness metrics

Critiques and alternative perspectives

Logistics

Course organization

- Module 1: background knowledge (climate risk, etc.)

- Module 2: quantitative tools for decision-making under uncertainty

- Module 3: getting more philosophical and practical

Notation

Why do we use computers?

Your turn

Robustness motivation

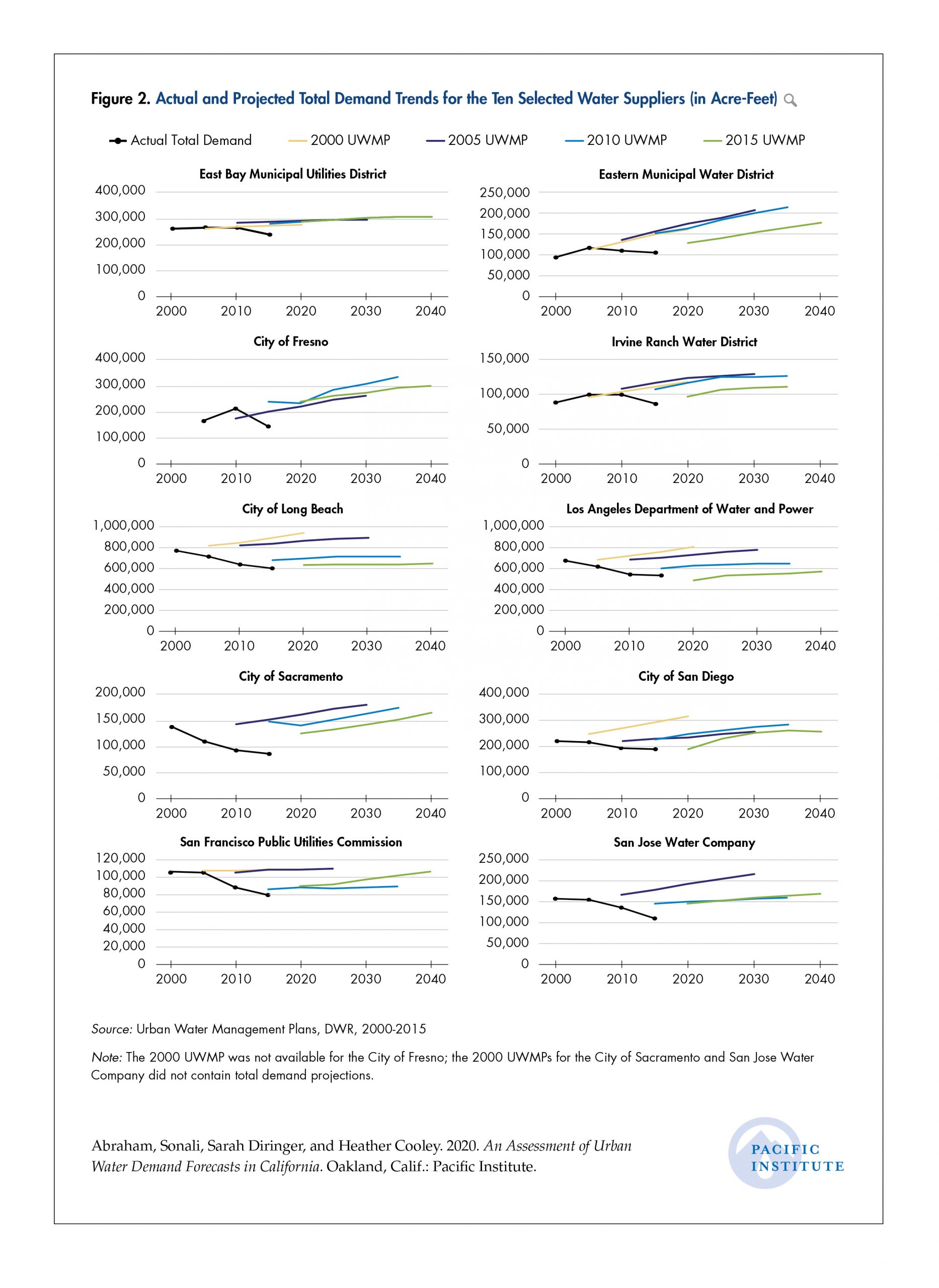

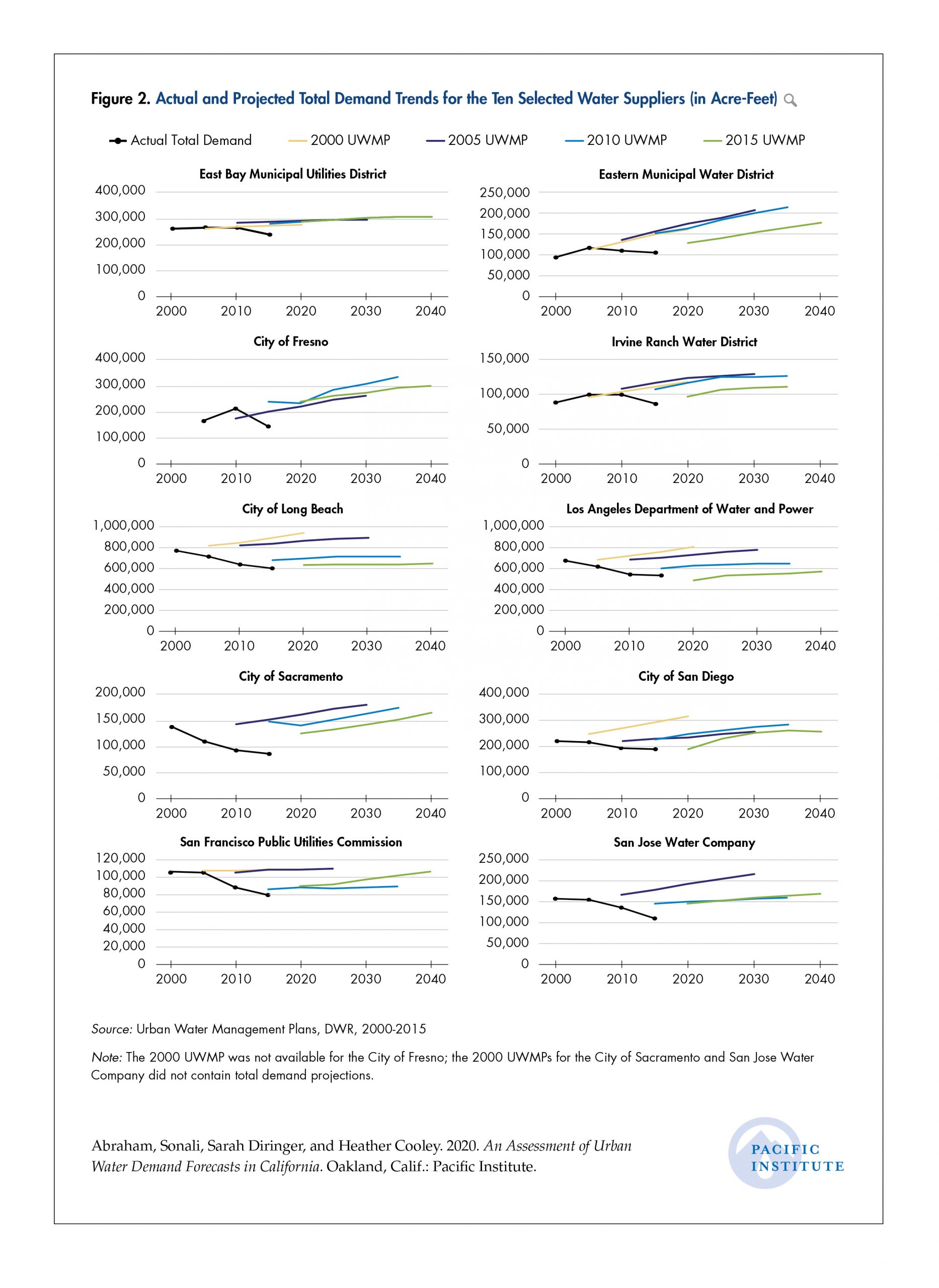

The problem

Abraham et al. (2020) show that water utilities systematically over-estimate future demand. Relying on a single forecast of future water demand, treated as if it were certain, can therefore motivate over-building infrastructure.

Robustness

We want to make choices and design infrastructure that are robust to errors in demand forecasts.

Definition

The insensitivity of system design to errors, random or otherwise, in the estimates of those parameters affecting design choice (Matalas & Fiering, 1977)

Mathematical definitions differ dramatically, however (Herman et al., 2015)! Today we will discuss some overlapping perspectives and ideas about robustness.

Bottom-up analysis

- Top-down, certain: experts develop a “best” forecast of future conditions, then choose a design that is optimal under that forecast

- Top-down, uncertain: experts assign likelihoods to uncertain states of the world, then choose a design that optimizes expected performance (Herman et al., 2015)

- Bottom-up: first explore to identify SOWs a solution is vulnerable to, then assess likelihood (more Wednesday!)
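As a rough illustration of the bottom-up idea, the sketch below sweeps over a range of demand growth rates and records the ones under which a fixed-capacity design fails, without assigning any likelihoods yet. The capacity, initial demand, planning horizon, growth rates, and the function name `final_demand` are all hypothetical values chosen for illustration, not numbers from the lecture.

```python
# Bottom-up sketch: find the SOWs a design is vulnerable to, before
# asking how likely those SOWs are. All numbers are hypothetical.

def final_demand(initial_demand, growth_rate, years):
    """Demand after compound growth at a constant annual rate."""
    return initial_demand * (1 + growth_rate) ** years

capacity = 150.0        # assumed design capacity
initial_demand = 100.0  # assumed current demand
years = 30              # assumed planning horizon

# Each SOW is one annual growth rate: 0.0%, 0.5%, ..., 3.0%.
growth_rates = [i / 1000 for i in range(0, 31, 5)]

# The design is "vulnerable" in a SOW if demand ends up above capacity.
vulnerable = [
    g for g in growth_rates
    if final_demand(initial_demand, g, years) > capacity
]

print("Design fails for growth rates:", vulnerable)
```

Only after identifying this vulnerable region would a bottom-up analysis ask how plausible growth rates in that range are.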

Robustness metrics

Taxonomy

Herman et al. (2015)

Regret

Regret measures how sorry you are about your choice. There are two main definitions (Herman et al., 2015):

- Deviation of a single solution in the real world or a simulated SOW from its baseline (expected) performance

- Difference between the performance of a solution in the real world or a simulated SOW and the best possible performance in that SOW
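The two definitions can be made concrete with a small sketch. It assumes performance is measured as a cost (lower is better), that one SOW is designated the baseline, and that we have three candidate designs; the cost table, design names, and function names are hypothetical. The final step, picking the design with the smallest worst-case regret, is one common way to summarize regret across SOWs and is not prescribed by the definitions themselves.

```python
# Hypothetical costs (lower is better): one list per design, one entry per SOW.
costs = {
    "low_elevation": [10.0, 14.0, 30.0, 55.0],
    "mid_elevation": [18.0, 19.0, 22.0, 35.0],
    "high_elevation": [30.0, 30.0, 31.0, 32.0],
}
BASELINE_SOW = 0  # assumed index of the "expected" SOW


def regret_from_baseline(design_costs, baseline_index):
    """Definition 1: deviation of one design from its own baseline cost."""
    base = design_costs[baseline_index]
    return [c - base for c in design_costs]


def regret_from_best(all_costs):
    """Definition 2: gap between each design and the best design in each SOW."""
    n_sows = len(next(iter(all_costs.values())))
    best = [min(c[j] for c in all_costs.values()) for j in range(n_sows)]
    return {
        name: [c[j] - best[j] for j in range(n_sows)]
        for name, c in all_costs.items()
    }


baseline_regret = {
    name: regret_from_baseline(c, BASELINE_SOW) for name, c in costs.items()
}
best_regret = regret_from_best(costs)

# One possible summary: choose the design whose worst-case regret is smallest.
max_regret = {name: max(r) for name, r in best_regret.items()}
minimax_choice = min(max_regret, key=max_regret.get)

print(baseline_regret["low_elevation"])
print(best_regret["mid_elevation"])
print(minimax_choice)
```

Note that the first definition needs only one design's results, while the second requires evaluating every candidate design in every SOW.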

Satisficing

Satisficing measures whether solutions achieve specific minimum requirements, condensing performance into a binary “satisfactory” or “unsatisfactory”.

- With many SOWs, many studies use a domain criterion: over what fraction of SOWs does a solution satisfy a performance threshold (Herman et al., 2015)?

- Note: this is equivalent to asking what is the probability that a solution satisfies a performance threshold, although many people who calculate robustness metrics are allergic to the word “probability”

- More complex satisficing criteria: see McPhail et al. (2019).
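A minimal sketch of the domain criterion follows, assuming performance is a cost that must stay at or below a threshold. The costs, threshold, weights, and function names are hypothetical. The weighted version illustrates the note above: with equal weights the domain criterion is the probability of meeting the threshold, and with unequal weights it becomes that probability under a different distribution over SOWs.

```python
# Hypothetical costs (lower is better): one list per design, one entry per SOW.
costs = {
    "low_elevation": [10.0, 14.0, 30.0, 55.0],
    "mid_elevation": [18.0, 19.0, 22.0, 35.0],
    "high_elevation": [30.0, 30.0, 31.0, 32.0],
}
THRESHOLD = 25.0  # assumed maximum acceptable cost


def domain_criterion(design_costs, threshold):
    """Fraction of SOWs in which the design meets the threshold."""
    satisfied = [c <= threshold for c in design_costs]
    return sum(satisfied) / len(satisfied)


def weighted_domain_criterion(design_costs, threshold, weights):
    """Probability of meeting the threshold when SOW j has probability weights[j]."""
    total = sum(weights)
    return sum(w for c, w in zip(design_costs, weights) if c <= threshold) / total


unweighted = {name: domain_criterion(c, THRESHOLD) for name, c in costs.items()}

# Assumed (hypothetical) probabilities for the four SOWs.
sow_weights = [0.4, 0.3, 0.2, 0.1]
weighted = {
    name: weighted_domain_criterion(c, THRESHOLD, sow_weights)
    for name, c in costs.items()
}

print(unweighted)
print(weighted)
```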

Critiques and alternative perspectives

Parameters?

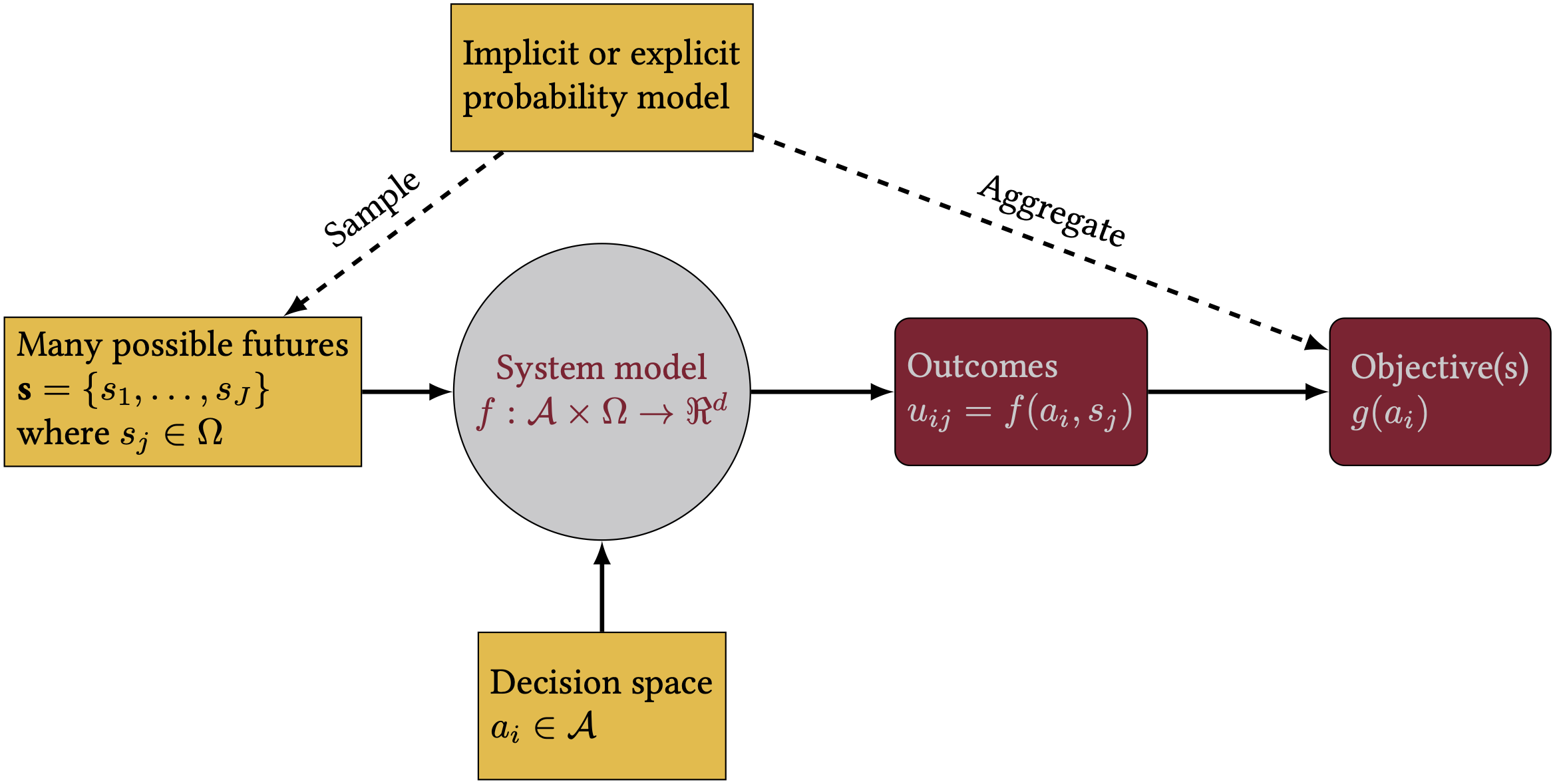

The robustness metrics we’ve seen are defined in terms of parameters: we have a model with parameters, and we define SOWs as different values of those parameters.

Is this a good way to quantify our conceptual ideas about robustness?

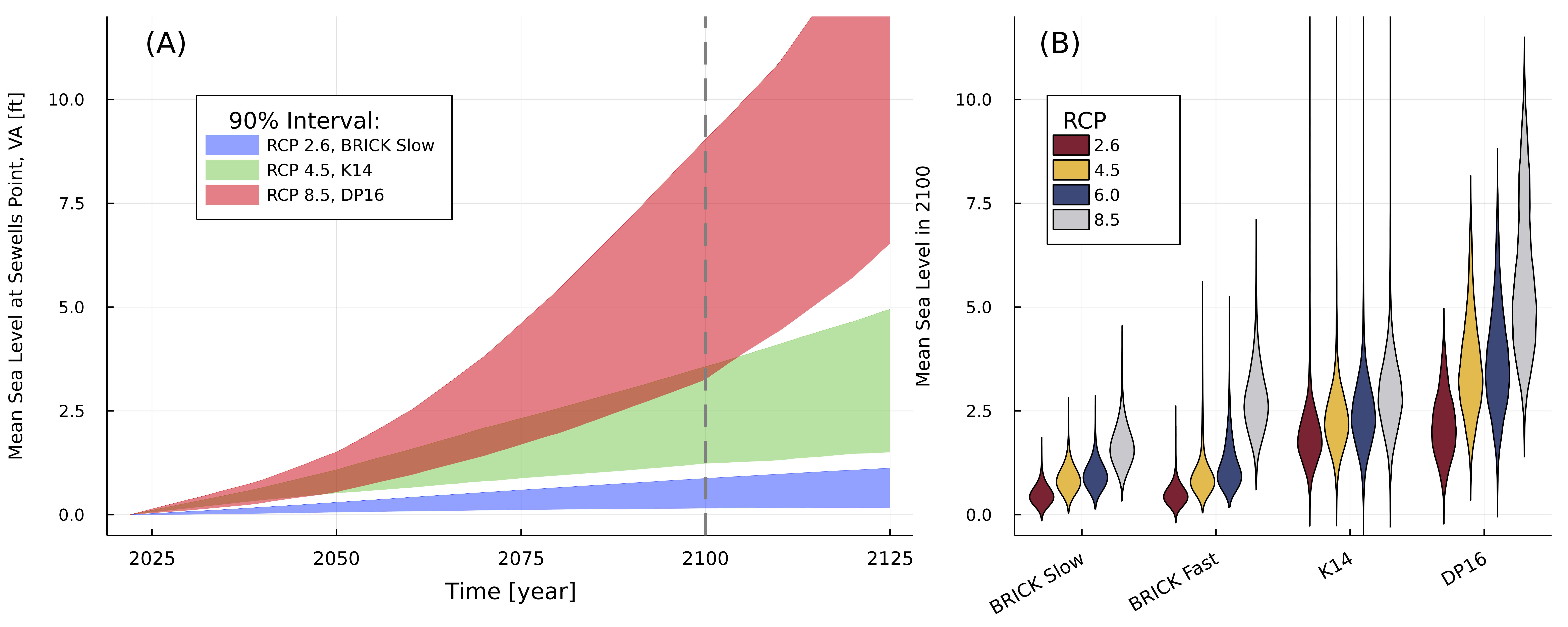

Combinations of uncertainties

In practice, we often have a combination of parametric uncertainties and “model structure” uncertainties (Doss-Gollin & Keller, 2023). And not all SOWs are equally likely!

Climate scenario uncertainties are “deep” (more next week!), but it would be a mistake to say we don’t know anything and all futures are equally likely (Hausfather & Peters, 2020).

Alternative perspective

- Using “prior beliefs”, assign likelihoods to different SOWs

- Use quantitative toolkit (optimization, sensitivity analysis, etc.)

- Vary prior beliefs: look for a solution that is robust to different probability distributions rather than to different parameter values.
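The three steps above can be sketched as follows: assign several candidate priors over the SOWs, compute each design's expected cost under each prior, and check whether the preferred design changes. The cost table, the three priors, and all names are hypothetical, and expected cost stands in for whatever quantitative tool (optimization, sensitivity analysis, etc.) would be used in a real analysis.

```python
# Hypothetical costs (lower is better): one list per design, one entry per SOW.
costs = {
    "low_elevation": [10.0, 14.0, 30.0, 55.0],
    "mid_elevation": [18.0, 19.0, 22.0, 35.0],
    "high_elevation": [30.0, 30.0, 31.0, 32.0],
}

# Assumed alternative prior beliefs over the four SOWs (each sums to 1).
priors = {
    "optimistic": [0.50, 0.30, 0.15, 0.05],
    "uniform": [0.25, 0.25, 0.25, 0.25],
    "pessimistic": [0.05, 0.15, 0.30, 0.50],
}


def expected_cost(design_costs, prior):
    """Probability-weighted average cost of one design under one prior."""
    return sum(c * p for c, p in zip(design_costs, prior))


# For each prior, find the design with the lowest expected cost.
best_by_prior = {}
for prior_name, prior in priors.items():
    expected = {name: expected_cost(c, prior) for name, c in costs.items()}
    best_by_prior[prior_name] = min(expected, key=expected.get)

print(best_by_prior)
```

In this toy example the preferred design changes between the optimistic prior and the other two, which is the kind of sensitivity to prior beliefs that this perspective is meant to expose.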

Logistics

Exam 2

- Exam 2 was scheduled for 3/22

- It will be postponed to 3/29

- 3/22: review session

- Lake problem lab will be postponed to 4/5

- I will have Exam 1 graded by 3/22 so you know where you stand!

Final project

In your final project, you will add something that’s missing to the house-elevation problem. This might be:

- More complexity / realism for a model component (e.g., depth-damage function, nonstationary storm surge probability, household financial constraints, etc.)

- A new model component (e.g., a better decision alternative, etc.)

- Applying a decision tool from class (e.g., sequential decision analysis, robustness checks, etc.)

Timeline coming soon – don’t worry about Canvas for now.